Betty Documentation

Introduction

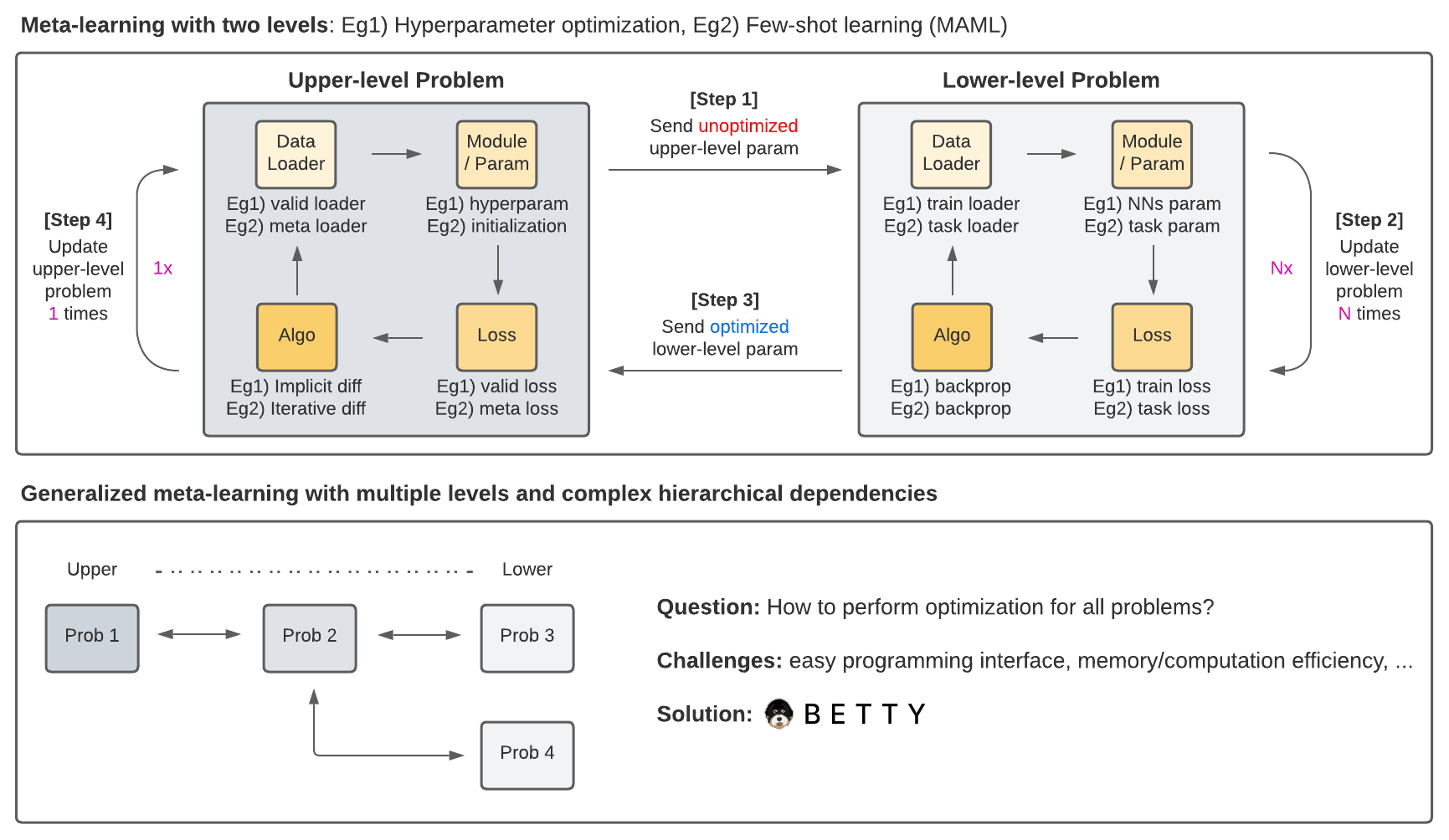

Betty is a PyTorch library for generalized meta-learning (GML) and multilevel optimization (MLO). It provides a unified programming interface for a range of GML/MLO applications, including meta-learning, hyperparameter optimization, neural architecture search, data reweighting, adversarial learning, and reinforcement learning.

Benefits

Implementing generalized meta-learning and multilevel optimization is notoriously complicated. For example, it requires approximating gradients using iterative/implicit differentiation, and writing nested for-loops to handle hierarchical dependencies between multiple levels.
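To make this concrete, here is a minimal sketch in plain Python (not Betty code) of a two-level problem solved by hand. The toy objective, the constants `a` and `b`, and all function names are assumptions chosen for illustration: the lower level fits a scalar weight `w` under an L2 penalty `lam`, and the upper level tunes `lam` so that the fitted weight matches a target `b`. The hypergradient uses implicit differentiation, and the nested loops are written out explicitly.

```python
# Toy bilevel problem (all names and constants are illustrative assumptions):
#   lower level: w*(lam) = argmin_w 0.5*(w - a)**2 + 0.5*lam*w**2
#   upper level: min_lam 0.5*(w*(lam) - b)**2

def inner_grad(w, lam, a):
    # Gradient of the lower-level loss with respect to w.
    return (w - a) + lam * w

def solve_inner(lam, a, w0=0.0, lr=0.1, steps=200):
    # Inner loop: plain gradient descent on w for a fixed lam.
    w = w0
    for _ in range(steps):
        w -= lr * inner_grad(w, lam, a)
    return w

def hypergradient(w, lam, b):
    # Implicit differentiation at the lower-level optimum:
    #   dw/dlam = -(d2f/dw dlam) / (d2f/dw2) = -w / (1 + lam)
    dw_dlam = -w / (1.0 + lam)
    # Chain rule through the upper-level loss 0.5*(w - b)**2.
    return (w - b) * dw_dlam

def bilevel(a=2.0, b=1.0, lam=0.5, outer_lr=0.5, outer_steps=200):
    # Outer loop: each update of lam requires re-solving the lower level.
    for _ in range(outer_steps):
        w = solve_inner(lam, a)
        lam -= outer_lr * hypergradient(w, lam, b)
    return lam, solve_inner(lam, a)

lam, w = bilevel()
```

For this toy problem the lower-level solution is `w = a / (1 + lam)` in closed form, so with `a = 2` and `b = 1` the upper level is minimized at `lam = 1`. Even here the gradient derivation and loop bookkeeping are manual, and both grow quickly with more levels or with neural-network parameters.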

Betty abstracts these low-level implementation details behind its API, so that users write only high-level declarative code. Implementing any GML/MLO program takes two steps:

Define each level’s optimization problem using the Problem class.

Define the hierarchical problem structure using the Engine class.

From here, Betty performs automatic differentiation for the MLO program, choosing from a set of provided gradient approximation methods, in order to carry out robust, high-performance GML/MLO.
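The two-step workflow above can be mimicked with a small stand-alone sketch. This is not Betty's API: `ToyProblem` and `ToyEngine` are hypothetical classes written only to illustrate the declarative idea of describing each level separately and then declaring how the levels depend on each other. The scalar objectives, the constants `A` and `B`, and the hand-written hypergradient are likewise assumptions; Betty computes such gradients automatically.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class ToyProblem:
    # Hypothetical stand-in for one optimization level (not Betty's Problem).
    name: str
    value: float
    grad_fn: Callable[[float, Dict[str, float]], float]
    lr: float = 0.1

    def step(self, others: Dict[str, float]) -> None:
        # One gradient-descent update given the current values of all levels.
        self.value -= self.lr * self.grad_fn(self.value, others)

class ToyEngine:
    # Hypothetical stand-in for the scheduler (not Betty's Engine).
    def __init__(self, problems: List[ToyProblem],
                 lower_of: Dict[str, List[str]], unroll_steps: int = 100):
        self.problems = {p.name: p for p in problems}
        self.lower_of = lower_of          # upper-level name -> lower-level names
        self.unroll_steps = unroll_steps  # lower-level steps per upper-level step

    def _values(self) -> Dict[str, float]:
        return {n: p.value for n, p in self.problems.items()}

    def run(self, upper_name: str, iterations: int) -> Dict[str, float]:
        upper = self.problems[upper_name]
        for _ in range(iterations):
            for lower_name in self.lower_of.get(upper_name, []):
                lower = self.problems[lower_name]
                for _ in range(self.unroll_steps):
                    lower.step(self._values())
            upper.step(self._values())
        return self._values()

A, B = 2.0, 1.0  # illustrative constants

def weight_grad(w, others):
    # Lower level: d/dw [0.5*(w - A)**2 + 0.5*decay*w**2]
    return (w - A) + others["decay"] * w

def decay_grad(lam, others):
    # Upper level: hand-derived hypergradient of 0.5*(w - B)**2 w.r.t. decay.
    w = others["weight"]
    return (w - B) * (-w / (1.0 + lam))

# Step 1: define each level's problem.
weight = ToyProblem("weight", 0.0, weight_grad, lr=0.1)
decay = ToyProblem("decay", 0.5, decay_grad, lr=0.5)

# Step 2: declare the hierarchy and let the engine schedule the updates.
engine = ToyEngine([decay, weight], lower_of={"decay": ["weight"]})
result = engine.run("decay", iterations=200)
```

The user-facing code is reduced to the two declarations at the bottom; the nested loops live inside the engine. In this sketch the upper-level gradient still had to be derived by hand, which is the part a library with automatic differentiation for MLO is meant to remove.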

Applications

Betty can be used to implement a wide range of GML/MLO applications, including hyperparameter optimization, neural architecture search, and data reweighting. We provide reference implementations for several of these applications.

While each of the above examples traditionally has a distinct implementation style, Betty allows common code structures and autodiff routines to be shared across all of them. We plan to implement more GML/MLO applications in the future.

Getting Started

The figure below illustrates the main concepts of GML/MLO.

Figure 1. Visual illustration of the main concepts of GML/MLO.